Introduction

The ICE Server can be installed on Amazon EKS from an image stored in Amazon Elastic Container Registry (ECR). This setup lets you run Kubernetes on AWS without installing and operating your own Kubernetes control plane or nodes. EKS provides a scalable and secure environment for deploying, managing, and scaling the ICE Server container.

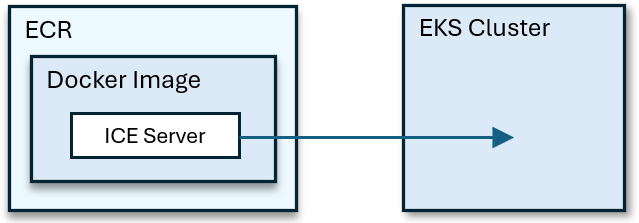

This document provides cloud-to-cloud deployment instructions. The source is an Amazon Elastic Container Registry (ECR) repository that holds the ICE Server as a Docker image. The target is an Amazon Elastic Kubernetes Service (EKS) cluster.
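The link between source and target is the image reference that EKS workloads use to pull from ECR. As a minimal sketch, the Python function below builds a private ECR image URI in the standard `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` form. The function name, account ID, region, repository name (`ice-server`), and tag are hypothetical placeholders for illustration only.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build a private ECR image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>.

    All argument values used below are hypothetical placeholders.
    """
    # AWS account IDs are always 12 digits; reject obvious typos early.
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical account, region, repository, and tag.
    uri = ecr_image_uri("123456789012", "us-east-1", "ice-server", "1.0.0")
    print(uri)  # 123456789012.dkr.ecr.us-east-1.amazonaws.com/ice-server:1.0.0
```

This URI is the value placed in a container's `image` field. For a private repository, the EKS worker nodes pull using their node IAM role, which typically needs a policy such as the AWS managed `AmazonEC2ContainerRegistryReadOnly` policy.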

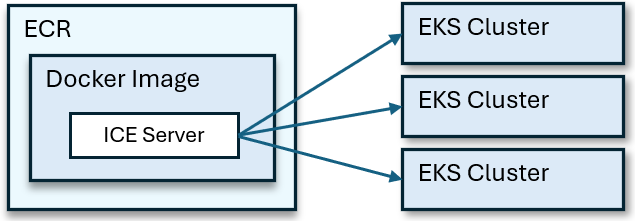

The following diagram provides a visualization of the process:

Once configured, the same Docker image can be deployed to multiple EKS clusters.
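To illustrate reusing one image across clusters, here is a minimal Python sketch that renders a single Kubernetes `apps/v1` Deployment manifest pointing at the ECR image and pairs it with a `kubectl --context <name> apply -f -` command for each cluster. The kubeconfig context names, image URI, deployment name, labels, and replica count are hypothetical placeholders, not values from the ICE Server distribution; a real manifest would also need the ICE Server's ports, resources, and configuration.

```python
import json

# Hypothetical kubeconfig context names, one per EKS cluster.
CLUSTER_CONTEXTS = ["ice-eks-a", "ice-eks-b", "ice-eks-c"]
# Hypothetical ECR image URI; every cluster pulls this same image.
IMAGE = "123456789012.dkr.ecr.us-east-1.amazonaws.com/ice-server:1.0.0"


def deployment_manifest(image: str, replicas: int = 3) -> dict:
    """Return a minimal apps/v1 Deployment running a single (hypothetical) ice-server container."""
    labels = {"app": "ice-server"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "ice-server", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": "ice-server", "image": image}]},
            },
        },
    }


def rollout_plan(contexts, image):
    """Pair each cluster context with a kubectl argv and the identical JSON manifest to apply."""
    manifest = json.dumps(deployment_manifest(image))
    return [(["kubectl", "--context", ctx, "apply", "-f", "-"], manifest) for ctx in contexts]


if __name__ == "__main__":
    for argv, manifest in rollout_plan(CLUSTER_CONTEXTS, IMAGE):
        # Printed rather than executed; pipe `manifest` to the command's stdin to apply it.
        print(" ".join(argv))
```

Because the manifest is rendered once and only the target context changes, every cluster runs exactly the same image tag. `kubectl apply` accepts JSON as well as YAML, so the rendered manifest can be piped to it directly.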

Deployment Models

ICE OS (Operating System) supports several deployment models on EKS, including single-node and multi-node clusters. These models can also be deployed georedundantly for high availability and resilience.

Production Deployment Node Size

For production deployments, nodes equivalent to m6gd or m7gd instances (or larger) are recommended. As a general scaling guideline, three m6i.2xlarge nodes can support approximately 5,000 simultaneous users.
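The scaling guideline works out to roughly 5,000 / 3 ≈ 1,667 simultaneous users per m6i.2xlarge node. The Python sketch below turns that into a rough node-count estimate. It assumes capacity scales linearly with node count, which is an assumption rather than a documented ICE Server property; the function and constant names are hypothetical, and real sizing should be validated with load testing.

```python
# Reference point from the guideline: 3 x m6i.2xlarge nodes ~ 5,000 simultaneous users.
REFERENCE_NODES = 3
REFERENCE_USERS = 5_000


def estimate_nodes(simultaneous_users: int) -> int:
    """Rough m6i.2xlarge-equivalent node count, ASSUMING capacity scales linearly per node."""
    if simultaneous_users <= 0:
        raise ValueError("simultaneous_users must be positive")
    # ceil(users * REFERENCE_NODES / REFERENCE_USERS) using integer math to avoid float rounding.
    return -(-simultaneous_users * REFERENCE_NODES // REFERENCE_USERS)


if __name__ == "__main__":
    print(estimate_nodes(5_000))   # 3
    print(estimate_nodes(12_000))  # 8
```

Rounding up rather than to the nearest integer keeps the estimate on the side of spare capacity: 12,000 users works out to 7.2 nodes, so the sketch returns 8.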